Articles tagged with bash tag

Yesterday I spent two very unpleasant hours debugging the weirdest SQL error I’ve seen in my life, running the below query (simplified for this post).

psql -qAt --no-psqlrc <<BACKUP

DO

$$

DECLARE r record;

BEGIN

RAISE INFO '%', 'info';

END

$$;

BACKUP

Running this in your terminal will result in a nasty syntax error.

ERROR: syntax error at or near "1111"

LINE 2: 1111

^

ERROR: syntax error at or near "RAISE"

LINE 2: RAISE INFO '%', 'info';

^

ERROR: syntax error at or near "1111"

LINE 2: 1111;

You stare on the screen for a while, absolutely sure that number 1111 is nowhere close to the data you work with. You try again. Another error. You save the code into a file and try again. It works. What the heck? You try again using the bash heredoc. Another failure.

The minute you realize $$ is being substituted with the ID of the current process, you feel like the dumbest person on Earth. Yet the happiest one at the same time.

The solution is trivial.

psql -qAt --no-psqlrc <<BACKUP

DO

\$\$

DECLARE r record;

BEGIN

RAISE INFO '%', 'info';

END

\$\$;

BACKUP

As I’m still running Ubuntu 16.04 based Linux Mint, I have no access to GDAL 2.x repositories (except for ubuntugis, that I really don’t like to use). Provided with a GeoPackage raster file recently, I had to find a way to load it into QGIS, somehow. The solution is simple: Docker with gdal_translate.

Preparing the Docker container

I like using Docker for experiments that might leave the OS in an unexpected state (which is exactly what happens to me with ubuntugis repository whenever I use it. That’s why I don’t anymore.). A very simple Dockerfile keeps the troubles away from you.

FROM ubuntu:17.04

RUN apt update

RUN apt install -y gdal-bin

cd into the folder and build the image with docker build -t gdal .. Once ready, summon the daemon, run the container, mount the GeoPackage file to the container directory and you’re ready to rock.

docker run -v /path/to/geopackage:/home/ -it gdal

Raster GeoPackage to GeoTiff translation

With the container running, the raster GeoPackage to GeoTiff translation can be done easily with gdal_translate. Note I chose to cut the source file into tiles, because the gdal_translate was choking about the resulting size.

#!/bin/bash

SIZE=10000

ULX=-630000

ULY=-1135450

LRX=-560000

LRY=-1172479

COUNTER_X=0

COUNTER_Y=0

while [[ $ULX -lt $LRX ]]

do

while [[ $ULY -gt $LRY ]]

do

echo $ULX, $(($ULX+$SIZE)), $ULY, $(($ULY-$SIZE))

gdal_translate \

-co TILED=YES \

-co COMPRESS=DEFLATE \

-co TFW=YES \

-co NUM_THREADS=ALL_CPUS \

-a_nodata 0 \

-of GTiff \

-projwin $ULX, $ULY, $(($ULX+$SIZE)), $(($ULY-$SIZE)) \

-projwin_srs EPSG:5514 \

data/detected.gpkg data/detected_${COUNTER_X}_${COUNTER_Y}.tiff

ULY=$(($ULY-$SIZE))

COUNTER_Y=$((COUNTER_Y+1))

done

ULX=$(($ULX+$SIZE))

ULY=-1135450

COUNTER_X=$((COUNTER_X+1))

done

Final Touch: Raster to Vector

After the GeoTiff is written to hard drive, inotifywait can be used to generate overviews. And with ease of calling gdal_polygonize.py on each of GeoTiffs…vector layer, at you service.

Speaking the Czech Republic, Prague is an undoubted leader in open data publishing. However, there is no public API to explore/search existing datasets.

I wanted to download the ESRI Shapefile of the city urban plan that is divided into more than a hundred files (a file representing a cadastral area).

This becomes a piece of cake with Opera Developer tools and a bit of JavaScript code

let links = document.getElementsByClassName('open-data-icon-rastr open-data-link tooltipstered')

for (let link of links) {

if (link.href.indexOf('SHP') === -1) { continue;}console.log(link.href)

}

With the list saved to a file called list.txt, wget --input-file=list.txt will download the data. Followed by for f in *.zip; do unzip $f -d ${f%%.zip}; done, each archive will be extracted in the directory called by its name.

Once done and assuming that the files are named consistently across the folders, ogr2ogr will merge all of them into a single GeoPackage file, resulting in just four files. Not bad considered I began with more than a hundred × 4.

ogr2ogr -f "GPKG" pvp_fvu_p.gpkg ./PVP_fvu_p_Bechovice_SHP/PVP_fvu_p.shp

find -type f -not -path './PVP_fvu_p_Bechovice_SHP*' -iname '*fvu_p.shp' -exec ogr2ogr -update -append -f "GPKG" pvp_fvu_p.gpkg '{}' \;

ogr2ogr -f "GPKG" pvp_fvu_popis_z_a.gpkg ./PVP_fvu_p_Bechovice_SHP/PVP_fvu_popis_z_a.shp

find -type f -not -path './PVP_fvu_p_Bechovice_SHP*' -iname '*fvu_popis_z_a.shp' -exec ogr2ogr -update -append -f "GPKG" pvp_fvu_popis_z_a.gpkg '{}' \;

ogr2ogr -f "GPKG" pvp_pp_pl_a.gpkg ./PVP_fvu_p_Bechovice_SHP/PVP_pp_pl_a.shp

find -type f -not -path './PVP_fvu_p_Bechovice_SHP*' -iname '*pp_pl_a.shp' -exec ogr2ogr -update -append -f "GPKG" pvp_pp_pl_a.gpkg '{}' \;

ogr2ogr -f "GPKG" pvp_pp_s_a.gpkg ./PVP_fvu_p_Bechovice_SHP/PVP_pp_s_a.shp

find -type f -not -path './PVP_fvu_p_Bechovice_SHP*' -iname '*pp_s_a.shp' -exec ogr2ogr -update -append -f "GPKG" pvp_pp_s_a.gpkg '{}' \;

A boring task that would take me hours five years ago transformed into simple, yet fun, piece of work done in no more than half an hour.

Imagine you run two database machines hosting structurally the same databases on two separate servers and you need to transfer data from one to another. Not very often, let’s say once a month. Your tables aren’t small nor huge, let’s say millions rows in general.

You’re going to use pg_dump and pipe it to psql, but the indices on your tables will slow you down a lot.

That’s why you’ll want to drop all indices and constraints (drop_indices_constraints.sql):

SELECT 'ALTER TABLE ' ||

tc.table_schema ||

'.' ||

tc.table_name ||

' DROP CONSTRAINT ' ||

tc.constraint_name ||

';'

FROM information_schema.table_constraints tc

JOIN information_schema.constraint_column_usage ccu ON (tc.constraint_catalog = ccu.constraint_catalog AND tc.constraint_schema = ccu.constraint_schema AND tc.constraint_name = ccu.constraint_name)

WHERE tc.table_schema IN (SELECT unnest(string_to_array(:'schemas', ',')))

UNION ALL

SELECT

'DROP INDEX IF EXISTS ' || schemaname || '.' || indexname || ';'

FROM pg_indexes

WHERE schemaname IN (SELECT unnest(string_to_array(:'schemas', ',')));

Then you will transfer the data:

pg_dump -a -t "schema1.*" -t "schema2.*" -O -d source -v | psql -h localhost -d target

And restore the already dropped indices and constraints (create_indices_constraints.sql):

WITH constraints AS (

SELECT 'ALTER TABLE ' ||

tc.table_schema ||

'.' ||

tc.table_name ||

' ADD CONSTRAINT ' ||

tc.constraint_name ||

' ' ||

tc.constraint_type ||

'(' ||

string_agg(ccu.column_name::text, ', ')|| -- column order should be taken into account here

');' def,

tc.table_schema,

tc.table_name,

tc.constraint_name

FROM information_schema.table_constraints tc

JOIN information_schema.constraint_column_usage ccu ON (tc.constraint_catalog = ccu.constraint_catalog AND tc.constraint_schema = ccu.constraint_schema AND tc.constraint_name = ccu.constraint_name)

WHERE tc.table_schema IN (SELECT unnest(string_to_array(:'schemas', ',')))

AND tc.constraint_type = 'PRIMARY KEY'

GROUP BY

tc.table_schema,

tc.table_name,

tc.constraint_name,

tc.constraint_type

)

SELECT def FROM constraints

UNION ALL

SELECT indexdef || ';'

FROM pg_indexes

WHERE schemaname IN (SELECT unnest(string_to_array(:'schemas', ',')))

AND NOT EXISTS (

SELECT 1 FROM

constraints c

WHERE pg_indexes.schemaname = c.table_schema

AND pg_indexes.tablename = c.table_name

AND pg_indexes.indexname = c.constraint_name

);

Few sidenotes

- Run the second piece of code first. If you forget, run that code on the source database.

- Notice the

:schemas. Variable assignment is one of the psql features I really like.

- Notice

DROP INDEX IF EXISTS and the CTE used in the drop code - that’s due to the fact that dropping the constraint obviously drops the underlying index as well and you don’t want to dropping something that doesn’t exist or creating something that exists already.

The bash script proposal might look as follows:

# store indices and constraint definitions

psql -qAt -d target -v schemas='schema1','schema2' -f create_indices_constraints.sql > create.sql

# drop indices and constraints

psql -qAt -d target -v schemas='schema1','schema2' -f drop_indices_constraints.sql | psql -d target

# load data

pg_dump -a -t "schema1.*" -t "schema2.*" -O -d source -v | psql -h localhost -d target

#renew indices and constraints

psql -qAt -d target -f create.sql

The Digital Elevation Model over Europe (EU-DEM) has been recently released for public usage at Copernicus Land Monitoring Services homepage. Strictly speaking, it is a digital surface model coming from weighted average of SRTM and ASTER GDEM with geographic accuracy of 25 m. Data are provided as GeoTIFF files projected in 1 degree by 1 degree tiles (projected to EPSG:3035), so they correspond to the SRTM naming convention.

If you can’t see the map to choose the data to download, make sure you’re not using HTTPS Everywhere or similar browser plugin.

I chose Austria to play with the data.

Obtaining the data

It’s so easy I doubt it’s even worth a word. Get zipped data with wget, extract them to a directory.

wget https://cws-download.eea.europa.eu/in-situ/eudem/eu-dem/EUD_CP-DEMS_4500025000-AA.rar -O dem.rar

unrar dem.rar -d copernicus

cd copernicus

Hillshade and color relief

Use GDAL to create hillshade with a simple command. No need to use -s flag to convert units, it already comes in meters. Exaggerate heights a bit with -z flag.

gdaldem hillshade EUD_CP-DEMS_4500025000-AA.tif hillshade.tif -z 3

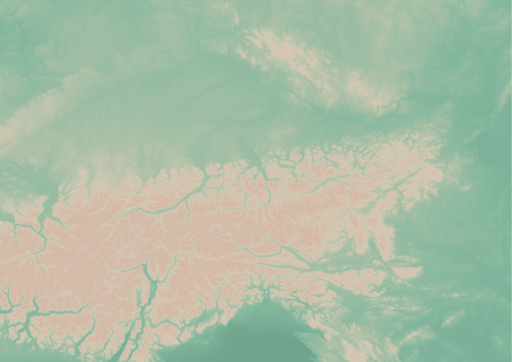

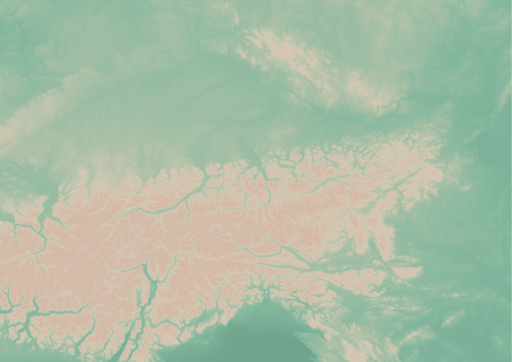

And here comes the Alps.

To create a color relief you need a ramp of heights with colors. “The Development and Rationale of Cross-blended Hypsometric Tints” by T. Patterson and B. Jenny is a great read on hypsometric tints. They also give advice on what colors to choose in different environments (see the table at the last page of the article). I settled for warm humid color values.

| Elevation [m] |

Red |

Green |

Blue |

| 5000 |

220 |

220 |

220 |

| 4000 |

212 |

207 |

204 |

| 3000 |

212 |

193 |

179 |

| 2000 |

212 |

184 |

163 |

| 1000 |

212 |

201 |

180 |

| 600 |

169 |

192 |

166 |

| 200 |

134 |

184 |

159 |

| 50 |

120 |

172 |

149 |

| 0 |

114 |

164 |

141 |

I created a color relief with another GDAL command.

gdaldem color-relief EUD_CP-DEMS_4500025000-AA.tif ramp_humid.txt color_relief.tif

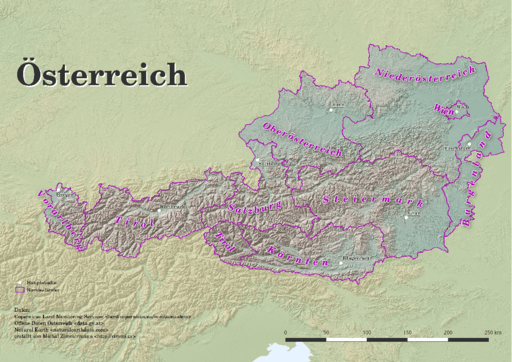

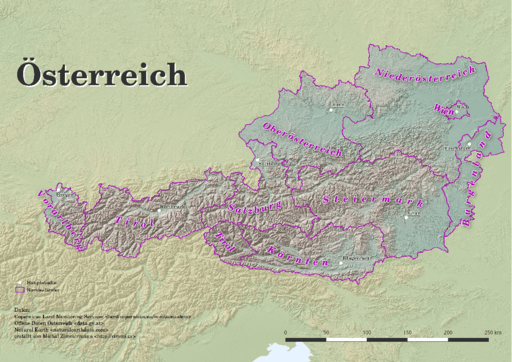

And here comes hypsometric tints.

Add a bit of compression and some overviews to make it smaller and load faster.

gdal_translate -of GTiff -co TILED=YES -co COMPRESS=DEFLATE color_relief.tif color_relief.compress.tif

gdal_translate -of GTiff -co TILED=YES -co COMPRESS=DEFLATE hillshade.tif hillshade.compress.tif

rm color_relief.tif

rm hillshade.tif

mv color_relief.compress.tif color_relief.tif

mv hillshade.compress.tif hillshade.tif

gdaladdo color_relief.tif 2 4 8 16

gdaladdo hillshade.tif 2 4 8 16

Map composition

I chose Austria for its excessive amount of freely available datasets. What I didn’t take into consideration was my lack of knowledge when it comes to German (#fail). States come from data.gv.at and was dissolved from smaller administrative units. State capitals were downloaded from naturalearth.com.

I’d like to add some more thematic layers in the future. And translate the map to English.

Few words on INSPIRE Geoportal

INSPIRE Geoportal should be the first place you go to search for European spatial data (at last EU thinks so). I used it to find data for this map and it was a very frustrating experience. It was actually more frustrating than using Austrian open data portal in German. Last news are from May 21, 2015, but the whole site looks and feels like deep 90s or early 2000 at least.