Articles tagged with postgresql tag

Imagine you run two structurally identical databases on two separate servers and you need to transfer data from one to the other. Not very often, let’s say once a month. Your tables are neither small nor huge, let’s say millions of rows in general.

You’re going to use pg_dump and pipe it to psql, but the indices on your tables will slow you down a lot.

That’s why you’ll want to drop all indices and constraints (drop_indices_constraints.sql):

SELECT 'ALTER TABLE ' ||

tc.table_schema ||

'.' ||

tc.table_name ||

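' DROP CONSTRAINT IF EXISTS ' || -- the join yields one row per constrained column, so multi-column constraints appear more than once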

tc.constraint_name ||

';'

FROM information_schema.table_constraints tc

JOIN information_schema.constraint_column_usage ccu ON (tc.constraint_catalog = ccu.constraint_catalog AND tc.constraint_schema = ccu.constraint_schema AND tc.constraint_name = ccu.constraint_name)

WHERE tc.table_schema IN (SELECT unnest(string_to_array(:'schemas', ',')))

UNION ALL

SELECT

'DROP INDEX IF EXISTS ' || schemaname || '.' || indexname || ';'

FROM pg_indexes

WHERE schemaname IN (SELECT unnest(string_to_array(:'schemas', ',')));

Then you will transfer the data:

pg_dump -a -t "schema1.*" -t "schema2.*" -O -d source -v | psql -h localhost -d target

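And recreate the dropped indices and constraints (create_indices_constraints.sql). Note that this query regenerates only PRIMARY KEY constraints and indices; foreign keys and CHECK constraints dropped by the first script are not restored by it: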

WITH constraints AS (

SELECT 'ALTER TABLE ' ||

tc.table_schema ||

'.' ||

tc.table_name ||

' ADD CONSTRAINT ' ||

tc.constraint_name ||

' ' ||

tc.constraint_type ||

'(' ||

string_agg(ccu.column_name::text, ', ')|| -- column order should be taken into account here

');' def,

tc.table_schema,

tc.table_name,

tc.constraint_name

FROM information_schema.table_constraints tc

JOIN information_schema.constraint_column_usage ccu ON (tc.constraint_catalog = ccu.constraint_catalog AND tc.constraint_schema = ccu.constraint_schema AND tc.constraint_name = ccu.constraint_name)

WHERE tc.table_schema IN (SELECT unnest(string_to_array(:'schemas', ',')))

AND tc.constraint_type = 'PRIMARY KEY'

GROUP BY

tc.table_schema,

tc.table_name,

tc.constraint_name,

tc.constraint_type

)

SELECT def FROM constraints

UNION ALL

SELECT indexdef || ';'

FROM pg_indexes

WHERE schemaname IN (SELECT unnest(string_to_array(:'schemas', ',')))

AND NOT EXISTS (

SELECT 1 FROM

constraints c

WHERE pg_indexes.schemaname = c.table_schema

AND pg_indexes.tablename = c.table_name

AND pg_indexes.indexname = c.constraint_name

);

A few side notes

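- Run the second piece of code (create_indices_constraints.sql) first, before you drop anything, and keep its output. If you forget, run it on the source database instead, as its structure is the same.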

- Notice the :schemas variable. Variable assignment is one of the psql features I really like.

- Notice DROP INDEX IF EXISTS in the drop code and the CTE in the create code. Dropping a constraint drops the underlying index as well, and you don’t want to drop something that no longer exists or create something that exists already.
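The last note can be modelled outside the database. Below is a rough sketch in plain JavaScript (not part of the original scripts; the sample rows and the restoreStatements helper are made up for illustration) of what the CTE combined with NOT EXISTS does: primary keys are emitted as ADD CONSTRAINT, and an index definition is emitted only if the index does not back one of those constraints.

```javascript
// Hypothetical catalog rows; all names are illustrative only.
const constraints = [
  { schema: "schema1", table: "roads", name: "roads_pkey", type: "PRIMARY KEY", columns: ["id"] },
];

const indexes = [
  // Index backing the primary key: ADD CONSTRAINT recreates it implicitly.
  { schema: "schema1", table: "roads", name: "roads_pkey",
    def: "CREATE UNIQUE INDEX roads_pkey ON schema1.roads USING btree (id)" },
  // Ordinary index: it has to be recreated explicitly.
  { schema: "schema1", table: "roads", name: "roads_geom_idx",
    def: "CREATE INDEX roads_geom_idx ON schema1.roads USING gist (geom)" },
];

function restoreStatements(constraints, indexes) {
  const key = (o) => `${o.schema}.${o.table}.${o.name}`;
  // Names already covered by a constraint (the role of NOT EXISTS in the SQL).
  const backed = new Set(constraints.map(key));
  const constraintSql = constraints.map(
    (c) => `ALTER TABLE ${c.schema}.${c.table} ADD CONSTRAINT ${c.name} ${c.type} (${c.columns.join(", ")});`
  );
  const indexSql = indexes
    .filter((i) => !backed.has(key(i)))
    .map((i) => `${i.def};`);
  return constraintSql.concat(indexSql);
}

const statements = restoreStatements(constraints, indexes);
console.log(statements.join("\n"));
```

Without the filter, the primary key index would be created twice and the second attempt would fail.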

The bash script proposal might look as follows:

# store indices and constraint definitions

psql -qAt -d target -v schemas='schema1','schema2' -f create_indices_constraints.sql > create.sql

# drop indices and constraints

psql -qAt -d target -v schemas='schema1','schema2' -f drop_indices_constraints.sql | psql -d target

# load data

pg_dump -a -t "schema1.*" -t "schema2.*" -O -d source -v | psql -h localhost -d target

# recreate indices and constraints

psql -qAt -d target -f create.sql

PostgreSQL foreign data wrappers are used to connect a PostgreSQL database to different data sources, e.g. other SQL databases, CSV files, XLS spreadsheets…

The one I’ve been interested in for several months is Paul Ramsey’s OGR FDW - it gives you access to OGR supported spatial formats directly from your database. No more shapefiles lying around?

Each foreign data wrapper should have three basic components:

- foreign server object

- foreign user mapping - not necessary if you’re not connecting to another database

- foreign table(s)

I got some data about rivers and dams from DIBAVOD open datasets to play with.

First define the foreign server object:

CREATE SERVER dibavod

FOREIGN DATA WRAPPER ogr_fdw

OPTIONS (

datasource '/downloads/dibavod',

format 'ESRI Shapefile',

config_options 'SHAPE_ENCODING=CP1250'

);

Note the OGR specific driver configuration options are available inside config_options. In case of ESRI Shapefiles, the datasource is the directory your files reside in.

Let’s create PostgreSQL tables (use ogrinfo or Paul’s ogr_fdw_info to list the columns):

CREATE FOREIGN TABLE rivers (

fid integer,

utokj_id numeric,

utokjn_id numeric,

utokjn_f numeric,

prprop_z integer,

ex_jh integer,

pozn text,

shape_leng numeric,

naz_tok text,

idvt integer,

tok_id numeric,

shape_len numeric,

geom geometry(LINESTRING, 5514)

)

SERVER dibavod

OPTIONS (layer 'A02_Vodni_tok_JU');

CREATE FOREIGN TABLE dams (

fid integer,

objectid integer,

naz_na text,

nadr_gid numeric,

kota_hladi numeric,

hloubka numeric,

zatop_ploc numeric,

objem numeric,

kota_hraz numeric,

kota_preli numeric,

kota_vypus numeric,

plocha_m2 numeric,

shape_area numeric,

shape_len numeric,

geom geometry(MULTIPOLYGON, 5514)

)

SERVER dibavod

OPTIONS (layer 'A05_Vodni_nadrze');

Note the fid column - required for write access to underlying datasource.

Things to remember:

- foreign tables mean no constraints or indices

- no indices mean spatial queries are terribly slow compared to PostGIS

- I like the idea of CREATE UNLOGGED TABLE dams2 AS SELECT * FROM dams, not sure what to use it for though

Using JOIN clause

All my GIS life I’ve been using a simple JOIN clause to find a row with an id = previous_id + 1. In other words, imagine a simple table with no indices:

CREATE TABLE test (id integer);

INSERT INTO test SELECT i FROM generate_series(1,10000000) i;

Let’s retrieve next row for each row in that table:

SELECT a.id, b.id

FROM test a

LEFT JOIN test b ON (a.id + 1 = b.id); -- note the LEFT JOIN is needed to get the last row as well

Execution plan looks like this:

Hash Join (cost=311087.17..953199.41 rows=10088363 width=8) (actual time=25440.770..79591.869 rows=10000000 loops=1)

Hash Cond: ((a.id + 1) = b.id)

-> Seq Scan on test a (cost=0.00..145574.63 rows=10088363 width=4) (actual time=0.588..10801.584 rows=10000001 loops=1)

-> Hash (cost=145574.63..145574.63 rows=10088363 width=4) (actual time=25415.282..25415.282 rows=10000001 loops=1)

Buckets: 16384 Batches: 128 Memory Usage: 2778kB

-> Seq Scan on test b (cost=0.00..145574.63 rows=10088363 width=4) (actual time=0.422..11356.108 rows=10000001 loops=1)

Planning time: 0.155 ms

Execution time: 90134.248 ms

If we add an index with CREATE INDEX ON test (id), the plan changes:

Merge Join (cost=0.87..669369.85 rows=9999844 width=8) (actual time=0.035..56219.294 rows=10000001 loops=1)

Merge Cond: (a.id = b.id)

-> Index Only Scan using test_id_idx on test a (cost=0.43..259686.10 rows=9999844 width=4) (actual time=0.015..11101.937 rows=10000001 loops=1)

Heap Fetches: 0

-> Index Only Scan using test_id_idx on test b (cost=0.43..259686.10 rows=9999844 width=4) (actual time=0.012..11827.895 rows=10000001 loops=1)

Heap Fetches: 0

Planning time: 0.244 ms

Execution time: 65973.421 ms

Not bad.

Using window function

Window functions are real fun. They’re great if you’re doing counts, sums or ranks by groups. And, to my surprise, they’re great at finding next rows as well.

With the same test table, we retrieve the next row for each row with the following query:

SELECT id, lead(id) OVER (ORDER BY id)

FROM test;

How does that score without an index? Better than the JOIN clause.

WindowAgg (cost=1581246.90..1756294.50 rows=10002720 width=4) (actual time=28785.388..63819.071 rows=10000001 loops=1)

-> Sort (cost=1581246.90..1606253.70 rows=10002720 width=4) (actual time=28785.354..40117.899 rows=10000001 loops=1)

Sort Key: id

Sort Method: external merge Disk: 136848kB

-> Seq Scan on test (cost=0.00..144718.20 rows=10002720 width=4) (actual time=0.020..10797.961 rows=10000001 loops=1)

Planning time: 0.242 ms

Execution time: 73391.024 ms

And it works even better if indexed. It’s actually ~1.5× faster than the JOIN way.

WindowAgg (cost=0.43..409770.03 rows=10002720 width=4) (actual time=0.087..35647.815 rows=10000001 loops=1)

-> Index Only Scan using test_id_idx on test (cost=0.43..259729.23 rows=10002720 width=4) (actual time=0.059..11310.879 rows=10000001 loops=1)

Heap Fetches: 0

Planning time: 0.247 ms

Execution time: 45388.202 ms

It reads well and the purpose of such a query is pretty obvious.
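One caveat is worth spelling out: the two queries return the same pairs only when the ids are unique and have no gaps, as in the generated test table. The sketch below is a plain JavaScript model of both approaches, not PostgreSQL code; the function names are invented for illustration.

```javascript
// Models lead(id) OVER (ORDER BY id): sort once, then look one row ahead.
function leadByWindow(ids) {
  const sorted = [...ids].sort((a, b) => a - b);
  return sorted.map((id, i) => [id, i + 1 < sorted.length ? sorted[i + 1] : null]);
}

// Models the LEFT JOIN on a.id + 1 = b.id using a hash lookup.
function nextByJoin(ids) {
  const lookup = new Set(ids);
  return ids.map((id) => [id, lookup.has(id + 1) ? id + 1 : null]);
}

const gapless = [3, 1, 2, 4];
console.log(leadByWindow(gapless)); // [[1,2],[2,3],[3,4],[4,null]]
console.log(nextByJoin(gapless));   // [[3,4],[1,2],[2,3],[4,null]] - same pairs, input order

const withGap = [1, 2, 5];
console.log(leadByWindow(withGap)); // [[1,2],[2,5],[5,null]]
console.log(nextByJoin(withGap));   // [[1,2],[2,null],[5,null]]
```

With a gap in the sequence, the window version still finds the next existing row, while the join version returns NULL for it.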

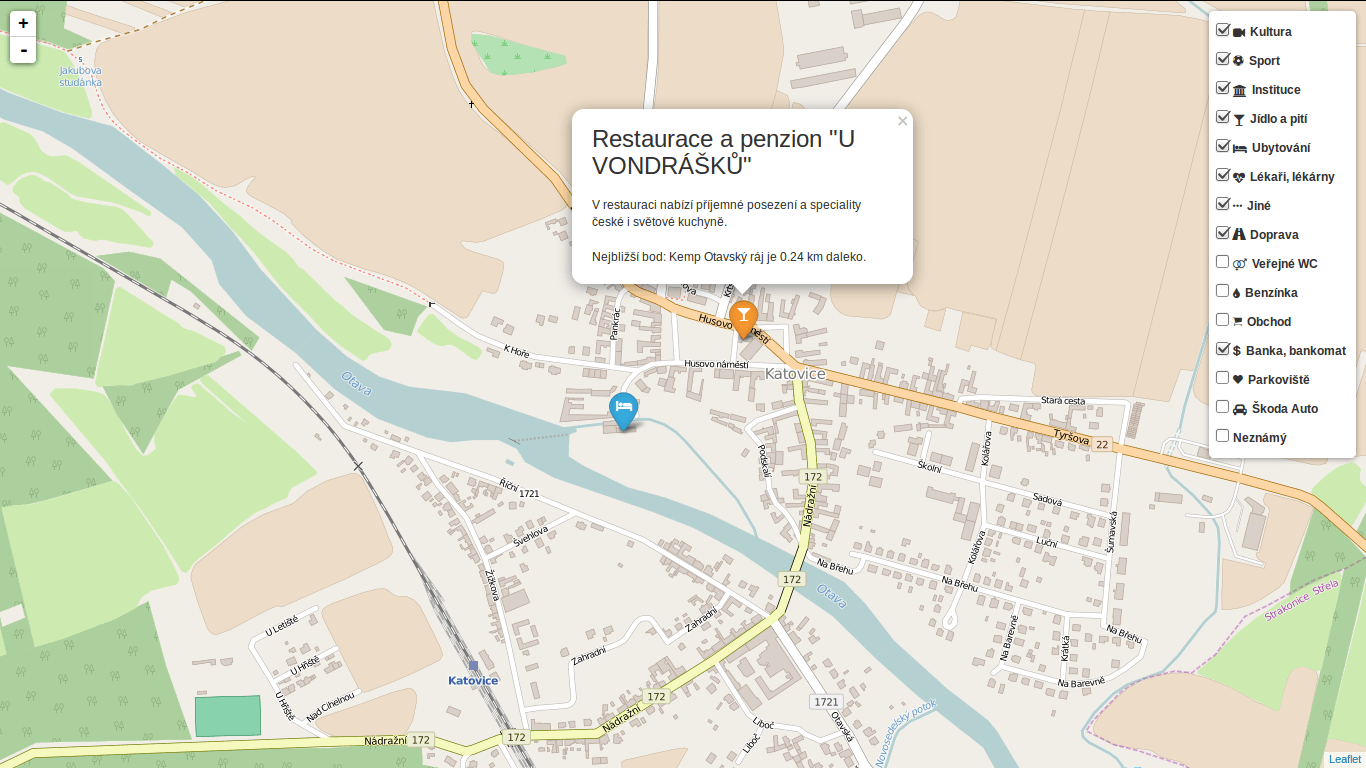

After a while I got back to my PostGIS open data case study. Last time I left it with clustering implemented, looking forward to incorporating Turf.js in the future. And the future is now. The code is still available on GitHub.

Subgroup clustering

Vozejkmap data is categorized by place type (banks, parking lots, pubs, …). One of the core features of a map showing such data should be an easy way to turn these categories on and off.

As far as I know, it’s not trivial to do this with the standard Leaflet library. Extending L.control.layers and implementing its addOverlay and removeOverlay methods on your own might be one way to add the needed behavior. Fortunately, there’s an easier option: Leaflet.FeatureGroup.SubGroup handles this use case and is really straightforward. See the code below.

cluster = L.markerClusterGroup({

chunkedLoading: true,

chunkInterval: 500

});

cluster.addTo(map);

...

for (var category in categories) {

// just use L.featureGroup.subGroup instead of L.layerGroup or L.featureGroup

overlays[my.Style.set(category).type] = L.featureGroup.subGroup(cluster, categories[category]);

}

mapkey = L.control.layers(null, overlays).addTo(map);

With this piece of code you get a map key with checkboxes for all the categories, yet they’re still kept in the single cluster on the map. Brilliant!
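To make the idea more tangible, here is a toy model of the behaviour in plain JavaScript. It is not the plugin’s actual code and it does not use Leaflet at all; the ParentGroup and SubGroup classes and their methods are invented for illustration. The point is only that toggling a subgroup moves its markers in and out of one shared parent.

```javascript
// Stand-in for the shared marker cluster: it only tracks which markers it holds.
class ParentGroup {
  constructor() {
    this.markers = new Set();
  }
  addLayers(markers) {
    markers.forEach((m) => this.markers.add(m));
  }
  removeLayers(markers) {
    markers.forEach((m) => this.markers.delete(m));
  }
}

// Stand-in for a subgroup: showing or hiding it delegates to the parent.
class SubGroup {
  constructor(parent, markers) {
    this.parent = parent;
    this.markers = markers;
  }
  show() {
    this.parent.addLayers(this.markers);
  }
  hide() {
    this.parent.removeLayers(this.markers);
  }
}

const sharedCluster = new ParentGroup();
const toggles = {
  banks: new SubGroup(sharedCluster, ["bank A", "bank B"]),
  pubs: new SubGroup(sharedCluster, ["pub A"]),
};

toggles.banks.show();
toggles.pubs.show();
console.log(sharedCluster.markers.size); // 3

toggles.banks.hide(); // like unchecking "banks" in the map key
console.log([...sharedCluster.markers]); // ["pub A"]
```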

Using Turf.js for analysis

Turf is one of those libraries I get amazed easily with, spending a week trying to find a use case, finally putting it aside with “I’ll get back to it later”. I usually don’t. This time it’s different.

I use Turf to get the nearest neighbor for any marker on click. My first try ended up with the same marker being the result as it was a member of a feature collection passed to turf.nearest() method. After snooping around the docs I found turf.remove() method that can filter GeoJSON based on key-value pair.

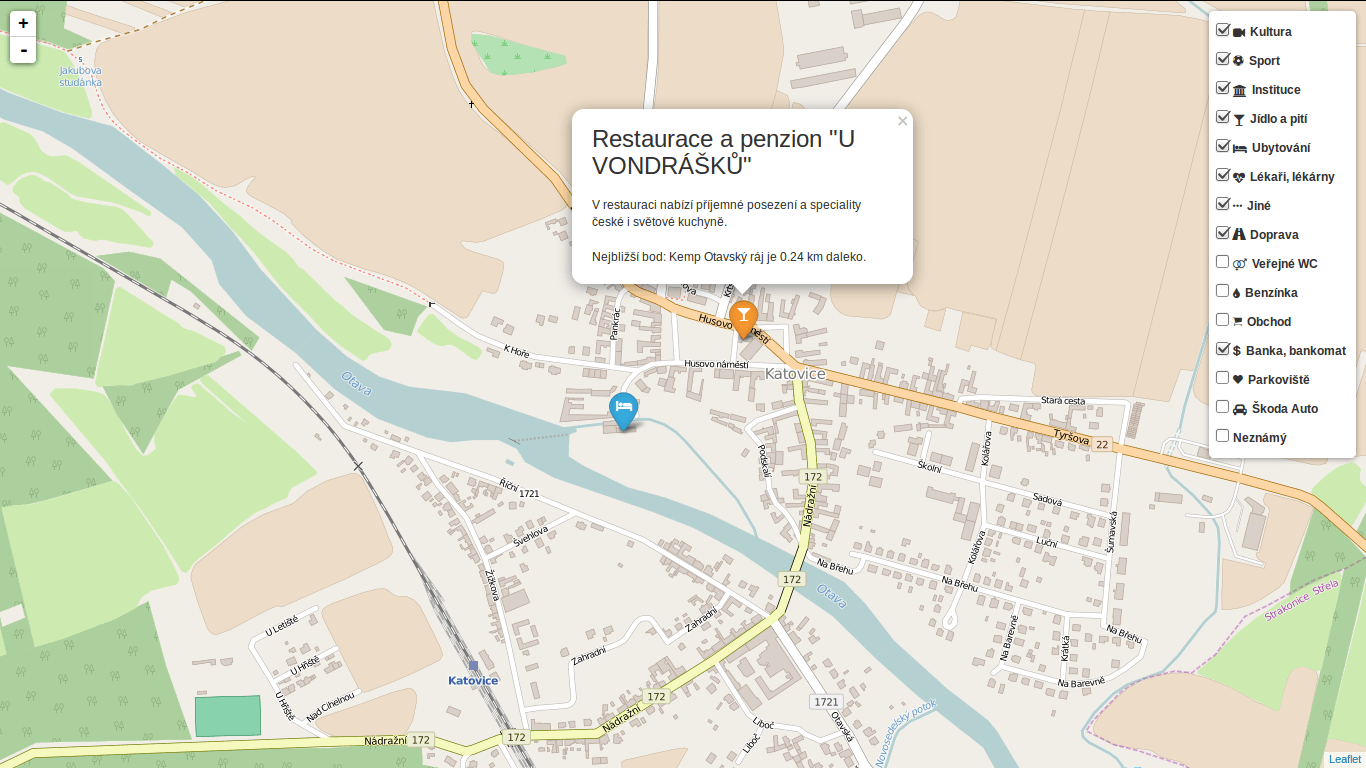

Another handy function is turf.distance(), which gives you the distance between two points. The code below adds information about the nearest point and its distance to the popup.

// data is a geojson feature collection

json = L.geoJson(data, {

onEachFeature: function(feature, layer) {

layer.on("click", function(e) {

var nearest = turf.nearest(layer.toGeoJSON(), turf.remove(data, "title", feature.properties.title)),

distance = turf.distance(layer.toGeoJSON(), nearest, "kilometers").toPrecision(2),

popup = L.popup({offset: [0, -35]}).setLatLng(e.latlng),

content = L.Util.template(

"<h1>{title}</h1><p>{description}</p> \

<p>Nejbližší bod: {nearest} je {distance} km daleko.</p>", {

title: feature.properties.title,

description: feature.properties.description,

nearest: nearest.properties.title,

distance: distance

});

popup.setContent(content);

popup.openOn(map);

...

From what I’ve tried so far, Turf seems to be incredibly fast and easy to use. Next, I’ll try to find the nearest point for each of the categories, which could take Turf some time.
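A minimal sketch of the per-category search, written in plain JavaScript without Turf so it runs on its own. It assumes each feature carries a category property (the code above only shows title and description), and the sample points and function names are made up. The distance is the haversine great-circle distance in kilometres.

```javascript
// Great-circle distance in kilometres between two [lon, lat] pairs (haversine formula).
function haversineKm([lon1, lat1], [lon2, lat2]) {
  const toRad = (deg) => (deg * Math.PI) / 180;
  const R = 6371; // mean Earth radius in km
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * R * Math.asin(Math.sqrt(a));
}

// For the clicked feature, find the nearest other feature in every category.
function nearestPerCategory(clicked, features) {
  const result = {};
  for (const f of features) {
    if (f === clicked) continue; // skip the clicked marker itself
    const d = haversineKm(clicked.geometry.coordinates, f.geometry.coordinates);
    const cat = f.properties.category; // assumed property name
    if (!(cat in result) || d < result[cat].distance) {
      result[cat] = { title: f.properties.title, distance: d };
    }
  }
  return result;
}

// Hypothetical sample data.
const point = (title, category, lon, lat) => ({
  type: "Feature",
  properties: { title, category },
  geometry: { type: "Point", coordinates: [lon, lat] },
});

const places = [
  point("Pub A", "pub", 16.6, 49.2),
  point("Pub B", "pub", 16.7, 49.2),
  point("Bank A", "bank", 16.61, 49.2),
  point("Bank B", "bank", 16.9, 49.3),
];

const nearestPlaces = nearestPerCategory(places[0], places);
console.log(nearestPlaces.pub.title, nearestPlaces.bank.title); // Pub B Bank A
```

This is a single pass over the features, so it stays cheap even with a few thousand points.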

Update

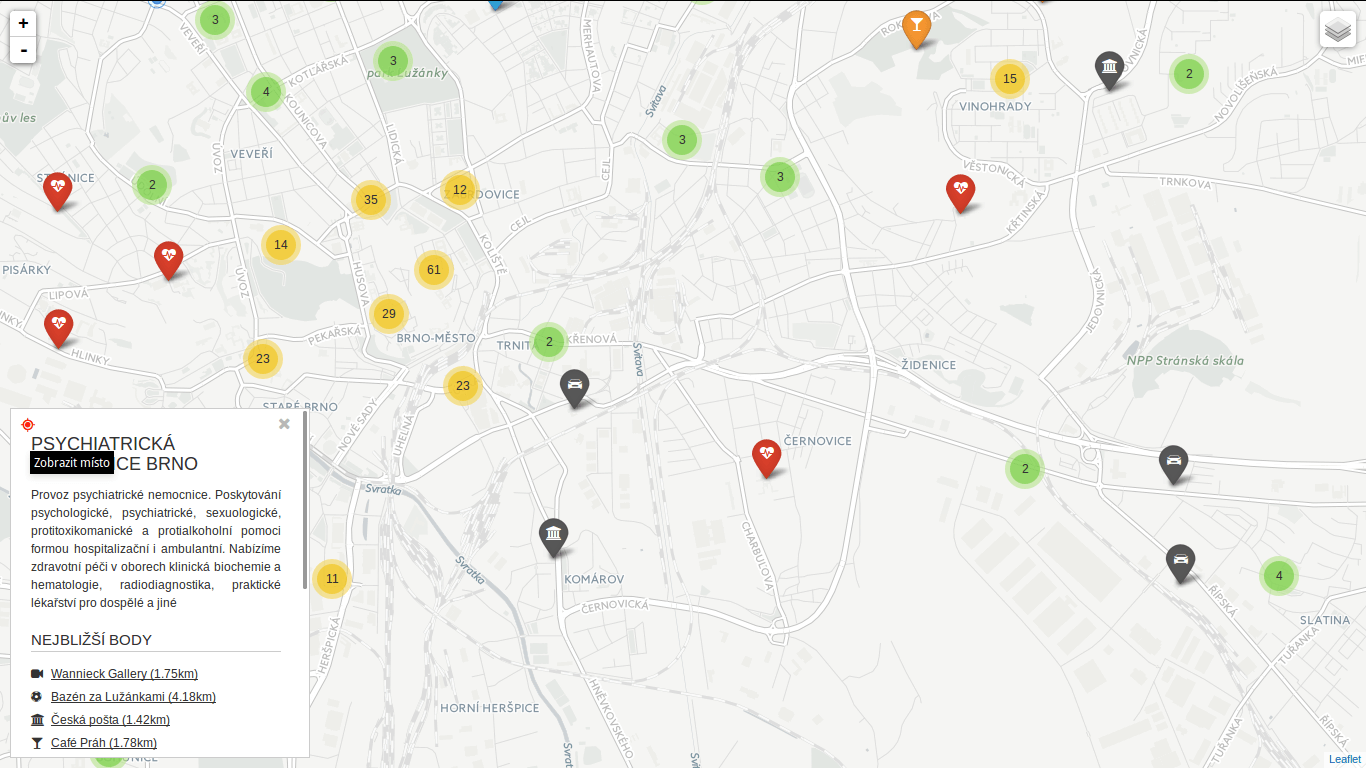

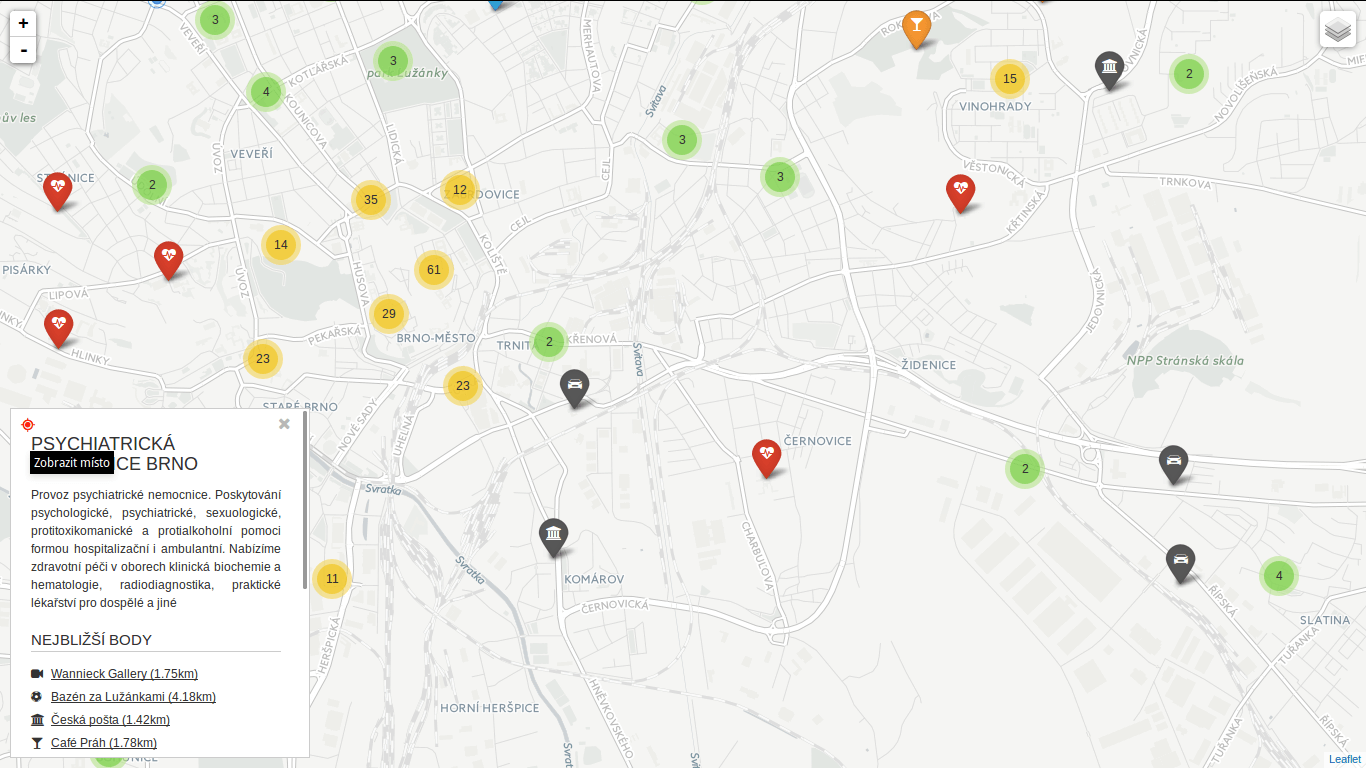

Turf is blazing fast! I’ve implemented nearest point for each of the categories and it gets done in a blink of an eye. Some screenshots below. Geolocation implemented as well.

You can locate the point easily.

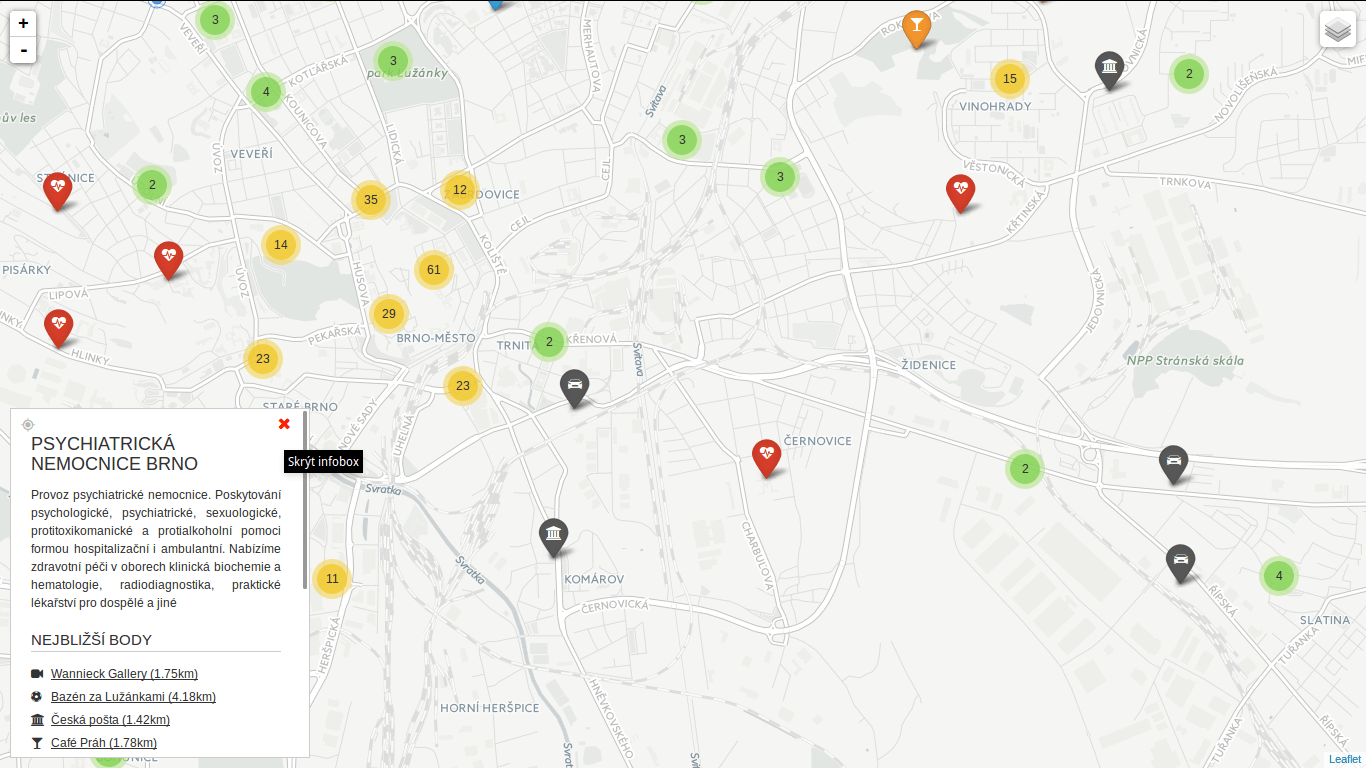

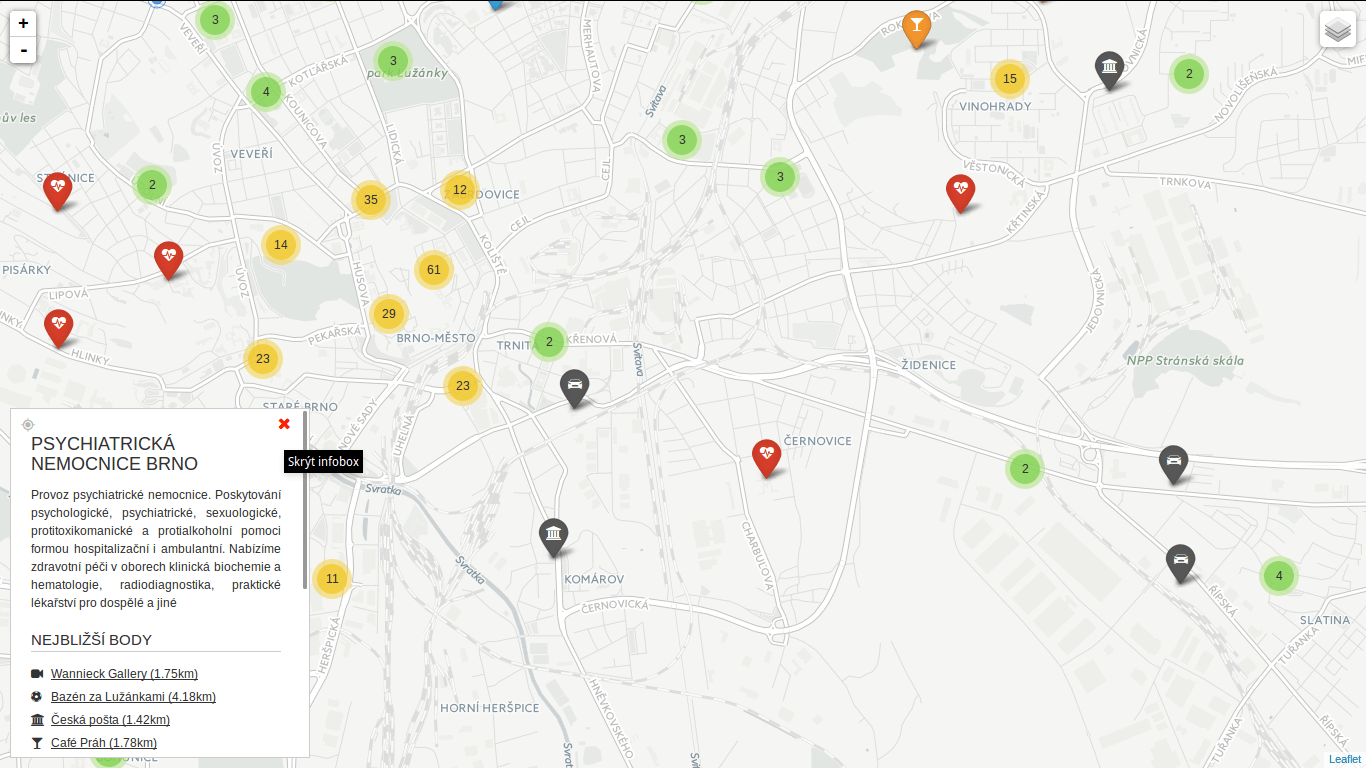

You can hide the infobox.

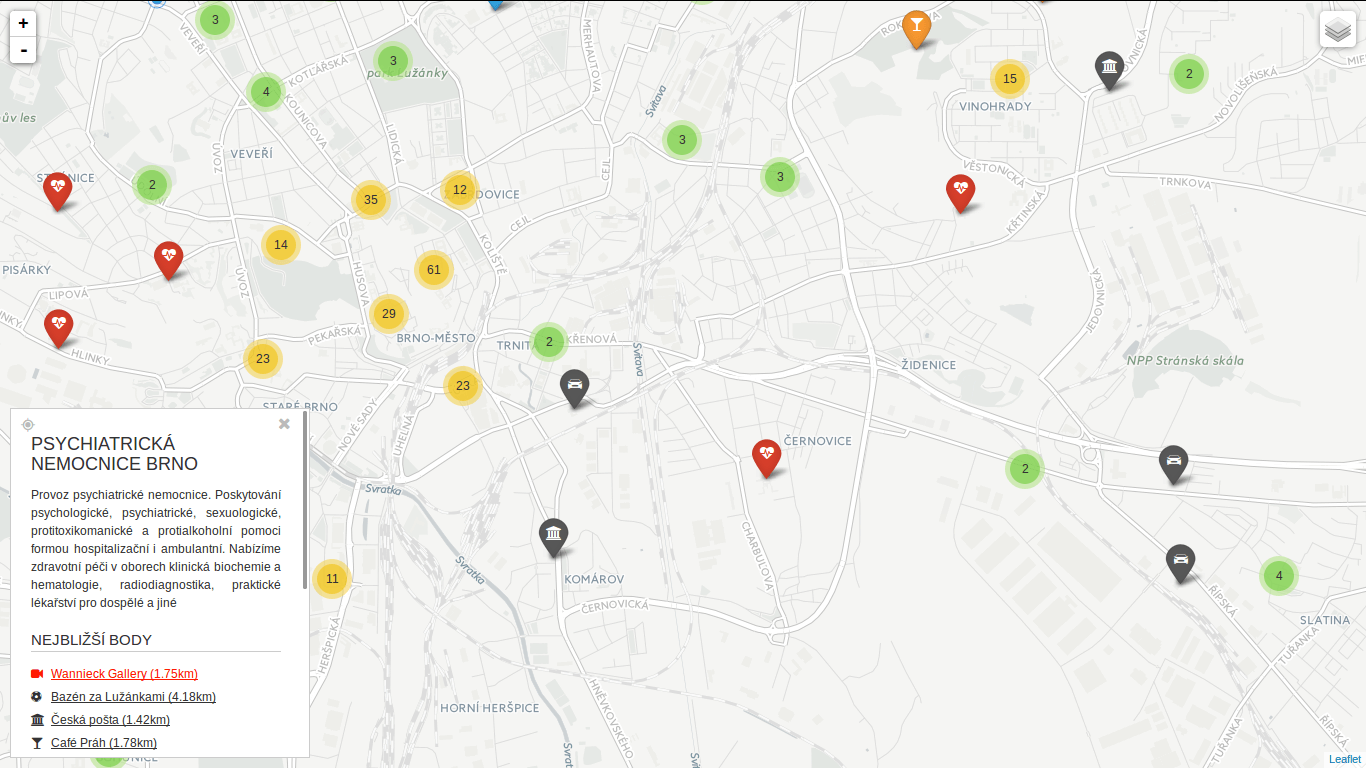

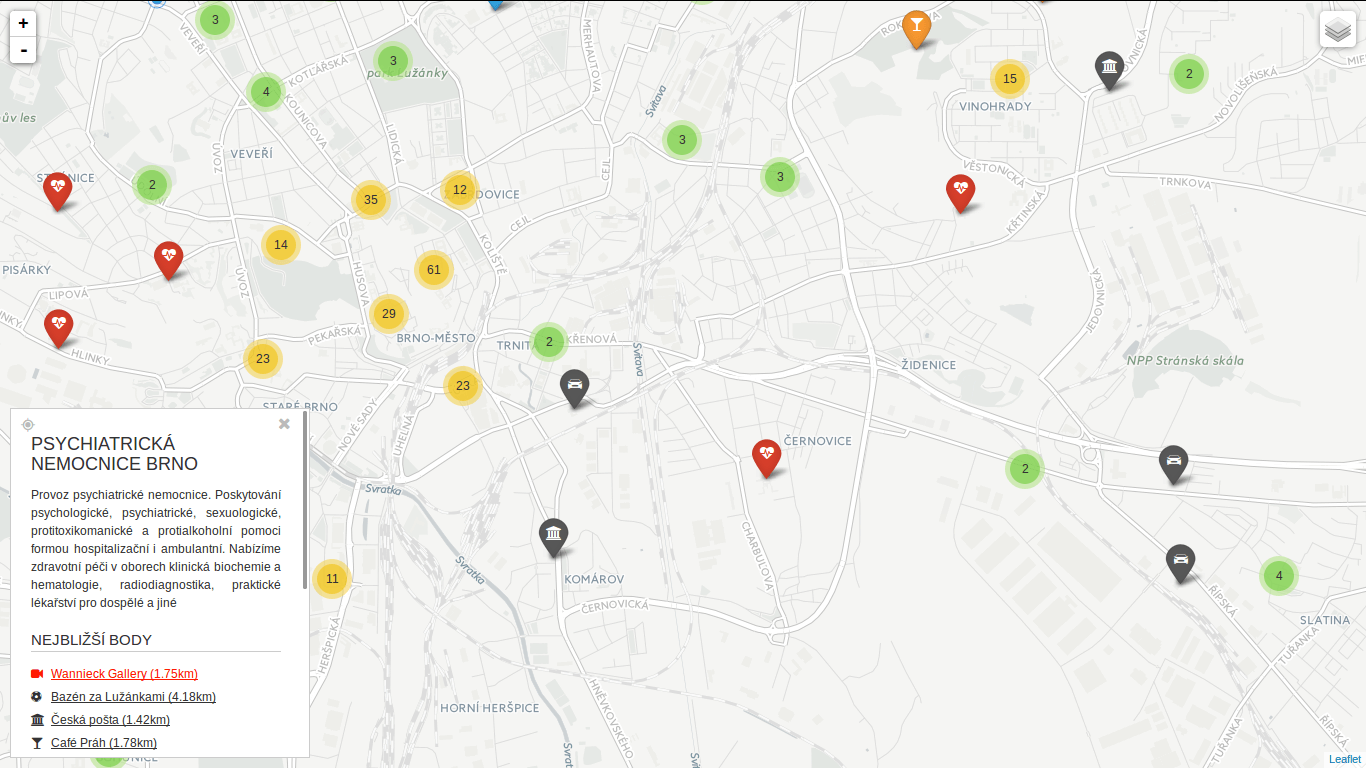

You can jump to any of the nearest places.

I’ve seen a bunch of questions on GIS StackExchange recently related to the SFCGAL extension for PostGIS 2.2. The good news is that it can be installed with one simple query, CREATE EXTENSION postgis_sfcgal. The not-so-good news is that you have to compile it from source on Ubuntu-based systems (14.04), as recent versions of the required packages are not available in the repositories.

I tested my solution on elementary OS 0.3.1 based on Ubuntu 14.04. And it works! It installs PostgreSQL 9.4 from repositories together with GDAL and GEOS and some other libs PostGIS depends on. PostGIS itself, CGAL, Boost, MPFR and GMP are built from source.

Here comes the code (commented where needed).

sudo -i

echo "deb http://apt.postgresql.org/pub/repos/apt/ trusty-pgdg main" | tee -a /etc/apt/sources.list

wget --quiet -O - https://www.postgresql.org/media/keys/ACCC4CF8.asc | sudo apt-key add -

apt-get update

apt-get install -y postgresql-9.4 \

postgresql-client-9.4 \

postgresql-contrib-9.4 \

libpq-dev \

postgresql-server-dev-9.4 \

build-essential \

libgeos-c1 \

libgdal-dev \

libproj-dev \

libjson0-dev \

libxml2-dev \

libxml2-utils \

xsltproc \

docbook-xsl \

docbook-mathml \

cmake \

gcc \

m4 \

icu-devtools

exit # leave root otherwise postgis will choke

cd /tmp

touch download.txt

cat <<EOT >> download.txt

https://gmplib.org/download/gmp/gmp-6.0.0a.tar.bz2

https://github.com/Oslandia/SFCGAL/archive/v1.2.0.tar.gz

http://www.mpfr.org/mpfr-current/mpfr-3.1.3.tar.gz

http://downloads.sourceforge.net/project/boost/boost/1.59.0/boost_1_59_0.tar.gz

https://github.com/CGAL/cgal/archive/releases/CGAL-4.6.3.tar.gz

http://download.osgeo.org/postgis/source/postgis-2.2.0.tar.gz

EOT

cat download.txt | xargs -n 1 -P 8 wget # make wget a little bit faster

tar xjf gmp-6.0.0a.tar.bz2

tar xzf mpfr-3.1.3.tar.gz

tar xzf v1.2.0.tar.gz

tar xzf boost_1_59_0.tar.gz

tar xzf CGAL-4.6.3.tar.gz

tar xzf postgis-2.2.0.tar.gz

CORES=$(nproc)

if (( CORES > 1 )); then # arithmetic comparison; > inside [[ ]] compares strings

CORES=$((CORES - 1)) # be nice to your PC

fi

cd gmp-6.0.0

./configure && make -j $CORES && sudo make -j $CORES install

cd ..

cd mpfr-3.1.3

./configure && make -j $CORES && sudo make -j $CORES install

cd ..

cd boost_1_59_0

./bootstrap.sh --prefix=/usr/local --with-libraries=all && sudo ./b2 install # there might be some warnings along the way, don't panic

echo "/usr/local/lib" | sudo tee /etc/ld.so.conf.d/boost.conf

sudo ldconfig

cd ..

cd cgal-releases-CGAL-4.6.3

cmake . && make -j $CORES && sudo make -j $CORES install

cd ..

cd SFCGAL-1.2.0/

cmake . && make -j $CORES && sudo make -j $CORES install

cd ..

cd postgis-2.2.0

./configure \

--with-geosconfig=/usr/bin/geos-config \

--with-xml2config=/usr/bin/xml2-config \

--with-projdir=/usr/share/proj \

--with-libiconv=/usr/bin \

--with-jsondir=/usr/include/json \

--with-gdalconfig=/usr/bin/gdal-config \

--with-raster \

--with-topology \

--with-sfcgal=/usr/local/bin/sfcgal-config && \

make && make cheatsheets && sudo make install # deliberately one CPU only

sudo -u postgres psql

sudo -u postgres createdb spatial_template

sudo -u postgres psql -d spatial_template -c "CREATE EXTENSION postgis;"

sudo -u postgres psql -d spatial_template -c "CREATE EXTENSION postgis_topology;"

sudo -u postgres psql -d spatial_template -c "CREATE EXTENSION postgis_sfcgal;"

sudo -u postgres psql -d spatial_template -c "SELECT postgis_full_version();"
